My work focuses on building systems where behavior is predictable, constraints are explicit, and performance tradeoffs are measurable. I'm most interested in the intersection of low-level runtimes, compiler-style tooling, and end-to-end ML pipelines, especially when the system must hold up under real-time or resource constraints.

Memory Systems & Allocation

I design allocation frameworks with deterministic behavior, explicit routing policies, and theory-informed models of fragmentation and runtime pressure. The goal is not only speed, but also control: predictable latency, clear failure modes, and debuggable policies.

- Deterministic routing: hierarchical priority rules and policy-driven placement

- Fragmentation modeling: tracking waste and stability under churn

- Adaptive regimes: switching strategies based on runtime conditions

- Real-time constraints: bounded behavior and predictable allocation paths
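To make the routing and fragmentation ideas above concrete, here is a minimal sketch. It is not the DRMAT, PICAS, or DIMCA design. It assumes a hypothetical size-class allocator with fixed classes (`SIZE_CLASSES`) and per-class capacities. The routing policy is a fixed-order scan, so the same request sequence always produces the same placements, and the scan length is bounded by the number of classes. A request that no class can serve raises `MemoryError`, which is an explicit failure mode. Internal fragmentation is tracked as the fraction of reserved bytes wasted by rounding requests up to a class size.

```python
from dataclasses import dataclass, field

# Hypothetical size classes in bytes, always scanned in this fixed order.
SIZE_CLASSES = (16, 32, 64, 128, 256)


@dataclass
class SizeClassPool:
    """Fixed-capacity pool of equally sized blocks (hypothetical)."""
    block_size: int
    capacity: int
    in_use: int = 0


@dataclass
class SizeClassRouter:
    pools: list = field(
        default_factory=lambda: [SizeClassPool(s, capacity=4) for s in SIZE_CLASSES]
    )
    requested_bytes: int = 0  # bytes callers asked for
    reserved_bytes: int = 0   # bytes actually reserved (rounded up to block sizes)

    def route(self, size: int) -> int:
        """Return the block size serving `size`; raise MemoryError if none can.

        Policy: first pool, in fixed order, whose blocks fit and that has room.
        The scan visits at most len(self.pools) pools, so the path is bounded.
        """
        for pool in self.pools:
            if pool.block_size >= size and pool.in_use < pool.capacity:
                pool.in_use += 1
                self.requested_bytes += size
                self.reserved_bytes += pool.block_size
                return pool.block_size
        raise MemoryError(f"no size class can serve {size} bytes")

    def free(self, size: int, block_size: int) -> None:
        """Release one block of `block_size` that was routed for `size` bytes."""
        for pool in self.pools:
            if pool.block_size == block_size and pool.in_use > 0:
                pool.in_use -= 1
                self.requested_bytes -= size
                self.reserved_bytes -= block_size
                return
        raise ValueError(f"no live block of size {block_size}")

    def internal_fragmentation(self) -> float:
        """Fraction of reserved bytes wasted by rounding requests up."""
        if self.reserved_bytes == 0:
            return 0.0
        return 1 - self.requested_bytes / self.reserved_bytes


router = SizeClassRouter()
router.route(20)    # served by the 32-byte class
router.route(100)   # served by the 128-byte class
print(router.internal_fragmentation())  # 1 - 120/160 = 0.25
```

When a class is full, the policy spills requests to the next larger class. That trades extra waste for a predictable placement rule. Calling `internal_fragmentation()` after each `route`/`free` pair is one simple way to watch waste under churn.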

Representative systems: DRMAT family, PICAS, and DIMCA-style low-level tracking allocators.
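The "adaptive regimes" item above can be illustrated with a small selector that switches placement strategies based on a runtime signal. This is a sketch under assumptions. The two regimes (`FAST` and `COMPACT`), the choice of a fragmentation ratio in [0, 1] as the signal, and the thresholds are all hypothetical. It does not describe how the systems listed above switch. The sketch uses two thresholds (hysteresis) so the regime does not flap when the signal hovers near a single cutoff.

```python
from enum import Enum


class Regime(Enum):
    FAST = "bump"         # hypothetical: cheap placement, tolerates more waste
    COMPACT = "best_fit"  # hypothetical: slower placement that reduces waste


class RegimeSelector:
    """Switch regimes on a fragmentation ratio, with hysteresis.

    Threshold defaults are illustrative assumptions, not tuned values.
    """

    def __init__(self, enter_compact: float = 0.30, exit_compact: float = 0.15):
        if exit_compact >= enter_compact:
            raise ValueError("exit threshold must be below enter threshold")
        self.enter_compact = enter_compact
        self.exit_compact = exit_compact
        self.regime = Regime.FAST

    def update(self, fragmentation: float) -> Regime:
        # Enter COMPACT only above the high threshold...
        if self.regime is Regime.FAST and fragmentation >= self.enter_compact:
            self.regime = Regime.COMPACT
        # ...and leave it only below the low threshold.
        elif self.regime is Regime.COMPACT and fragmentation <= self.exit_compact:
            self.regime = Regime.FAST
        return self.regime


selector = RegimeSelector()
print([selector.update(f).name for f in [0.10, 0.35, 0.25, 0.20, 0.10]])
# ['FAST', 'COMPACT', 'COMPACT', 'COMPACT', 'FAST']
```

Every transition is a pure function of the current regime and the input, so the switching policy stays deterministic and easy to log and debug.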

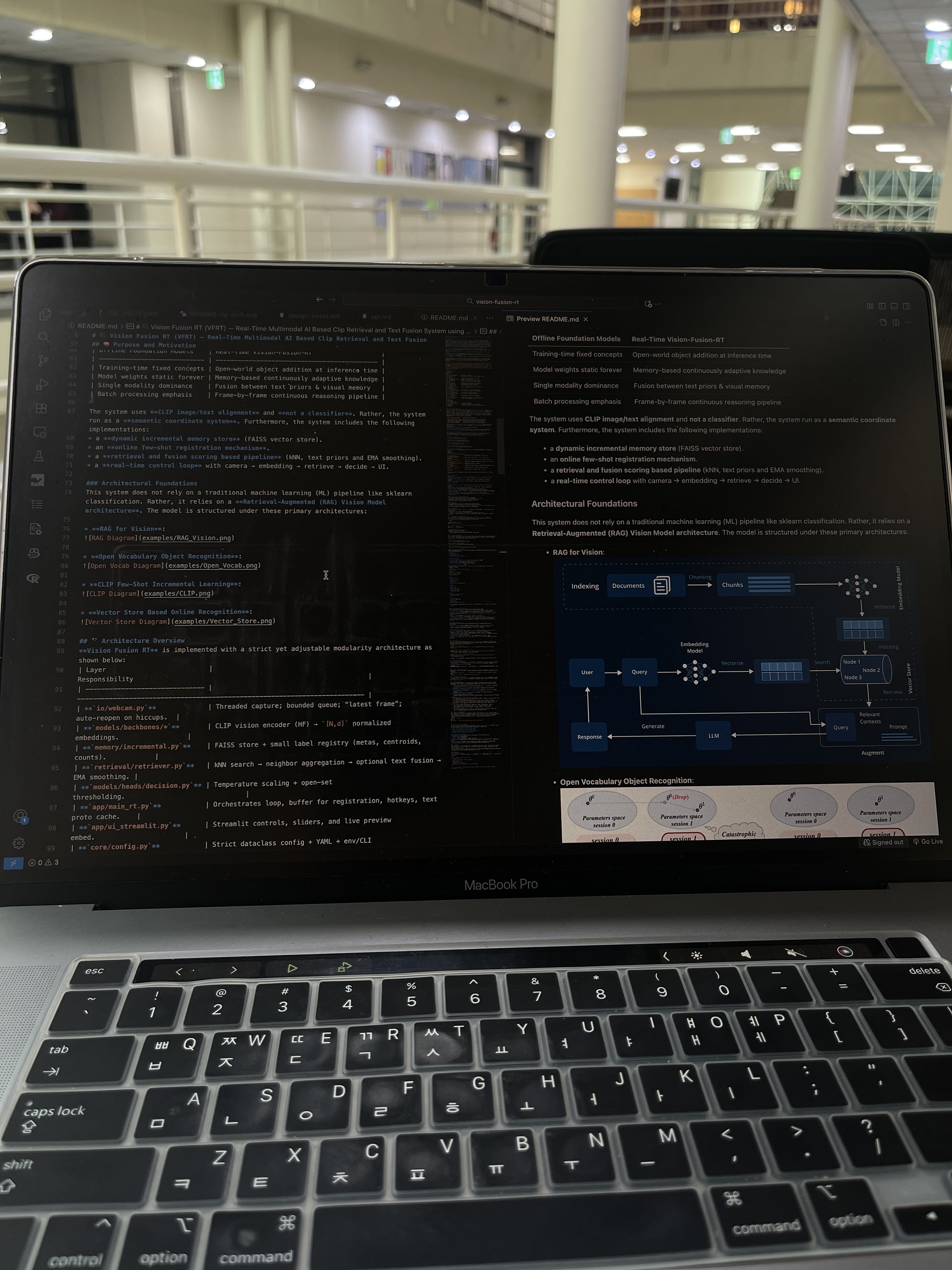

Multimodal AI Pipelines

I build end-to-end multimodal pipelines with emphasis on validation, longitudinal tracking, and interpretable metrics. I’m especially interested in systems that connect real-time signals to measurable outcomes (e.g., session metrics, trend analysis, and survey-backed validation).

- Real-time inference: streaming pipelines with stable throughput and monitoring

- Temporal modeling: LSTM / sequence modeling for state and trend estimation

- Explainability: landmark-driven analysis and correlation-based interpretation

- Validation: alignment against survey instruments and statistical evaluation
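The sketch below shows the streaming, trend, and validation steps above in a simplified form. It assumes a hypothetical per-frame score stream, and it stands in a rolling mean and a least-squares slope for the temporal model. It is not an LSTM, and it is not the pipeline's actual model. Validation is shown as a Pearson correlation between per-session metrics and survey scores. The window size and all data are illustrative.

```python
import math
from collections import deque


class RollingMonitor:
    """Fixed-size window over a stream of per-frame scores (hypothetical signal)."""

    def __init__(self, window: int = 30):
        self.values = deque(maxlen=window)  # oldest frames drop off automatically

    def push(self, score: float) -> float:
        self.values.append(score)
        return sum(self.values) / len(self.values)  # current rolling mean


def trend_slope(values):
    """Least-squares slope of session metrics over session index (units per session)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least 2 sessions")
    mx, my = (n - 1) / 2, sum(values) / n
    num = sum((x - mx) * (v - my) for x, v in zip(range(n), values))
    den = sum((x - mx) ** 2 for x in range(n))
    return num / den


def pearson(xs, ys):
    """Pearson correlation between session metrics and survey scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


monitor = RollingMonitor(window=3)
print([monitor.push(s) for s in [1.0, 2.0, 3.0, 4.0]])  # [1.0, 1.5, 2.0, 3.0]
print(trend_slope([1.0, 3.0, 5.0]))                     # 2.0
```

In a real study, the correlation would be reported with a significance test and the sample size. A single coefficient on a handful of sessions is weak evidence.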

Compilers & Infrastructure

I approach complex systems with compiler-style structure: explicit intermediate representations, correctness constraints, and pass-based transformations. This helps keep systems scalable as features grow and backends diversify.

- Pass-based architecture: semantic checking, validation, and transformation stages

- Backend parity: consistent evaluation across PyTorch / JAX execution paths

- Reproducibility: clear configs, measurable metrics, and traceable outputs

- Bias: correctness and predictability before optimization

- Metrics: latency, memory footprint, stability, reproducibility

- Style: explicit invariants, documented assumptions, debuggable policies

- Open to: internship or working roles, research collaboration, and systems discussions
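The following sketch shows the pass-based structure and backend parity described under Compilers & Infrastructure. Everything in it is a hypothetical stand-in: a two-op IR (`Node`), a validation pass, a transformation pass that folds consecutive adds, and two pure-Python evaluators in place of PyTorch and JAX. The parity check compares both evaluators on the same IR within a tolerance. That is the same kind of check one would run across real backends, where floating-point results can differ slightly.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Node:
    """Tiny hypothetical IR node: one elementwise op with a scalar argument."""
    op: str     # "scale" or "add"
    arg: float


ALLOWED_OPS = {"scale", "add"}


def validate(ir):
    # Validation pass: reject unknown ops before any transformation runs.
    for node in ir:
        if node.op not in ALLOWED_OPS:
            raise ValueError(f"unknown op {node.op!r}")
    return ir


def fold_adds(ir):
    # Transformation pass: merge runs of consecutive "add" nodes into one node.
    out = []
    for node in ir:
        if out and out[-1].op == "add" and node.op == "add":
            out[-1] = replace(out[-1], arg=out[-1].arg + node.arg)
        else:
            out.append(node)
    return out


def run_passes(ir, passes):
    for p in passes:
        ir = p(ir)
    return ir


def eval_loop(ir, xs):
    # Stand-in backend A: explicit index-based loops.
    ys = list(xs)
    for node in ir:
        for i, y in enumerate(ys):
            ys[i] = y * node.arg if node.op == "scale" else y + node.arg
    return ys


def eval_functional(ir, xs):
    # Stand-in backend B: build one function per node and map it over the data.
    makers = {"scale": lambda a: (lambda y: y * a), "add": lambda a: (lambda y: y + a)}
    ys = list(xs)
    for node in ir:
        f = makers[node.op](node.arg)
        ys = [f(y) for y in ys]
    return ys


def check_parity(ir, xs, tol=1e-9):
    a, b = eval_loop(ir, xs), eval_functional(ir, xs)
    return len(a) == len(b) and all(abs(x - y) <= tol for x, y in zip(a, b))


ir = [Node("add", 1.0), Node("add", 2.0), Node("scale", 2.0)]
optimized = run_passes(ir, [validate, fold_adds])
print(optimized)                          # [Node(op='add', arg=3.0), Node(op='scale', arg=2.0)]
print(eval_loop(optimized, [1.0, 2.0]))   # [8.0, 10.0]
print(check_parity(optimized, [1.0, 2.0]))  # True
```

Keeping each pass a plain function from IR to IR makes the pipeline order explicit and lets every stage be tested on its own. Evaluating both the original and the transformed IR is a cheap way to confirm that a pass preserves semantics.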
