Summary

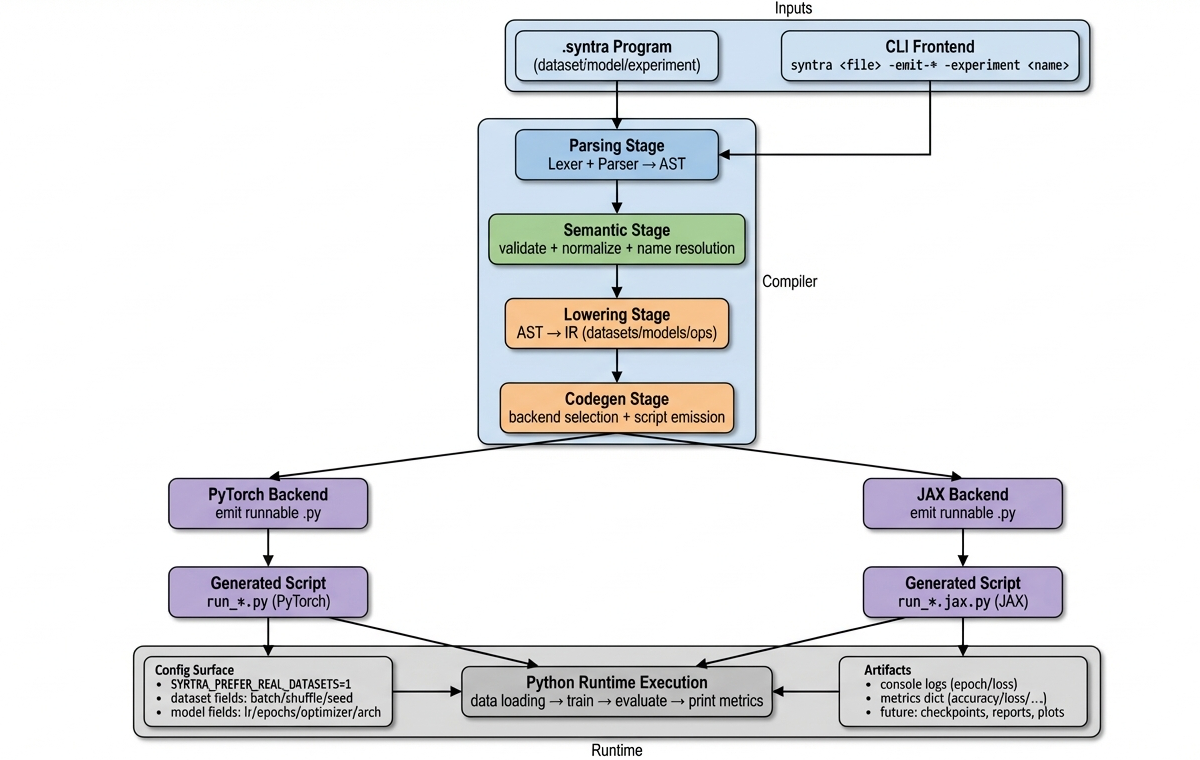

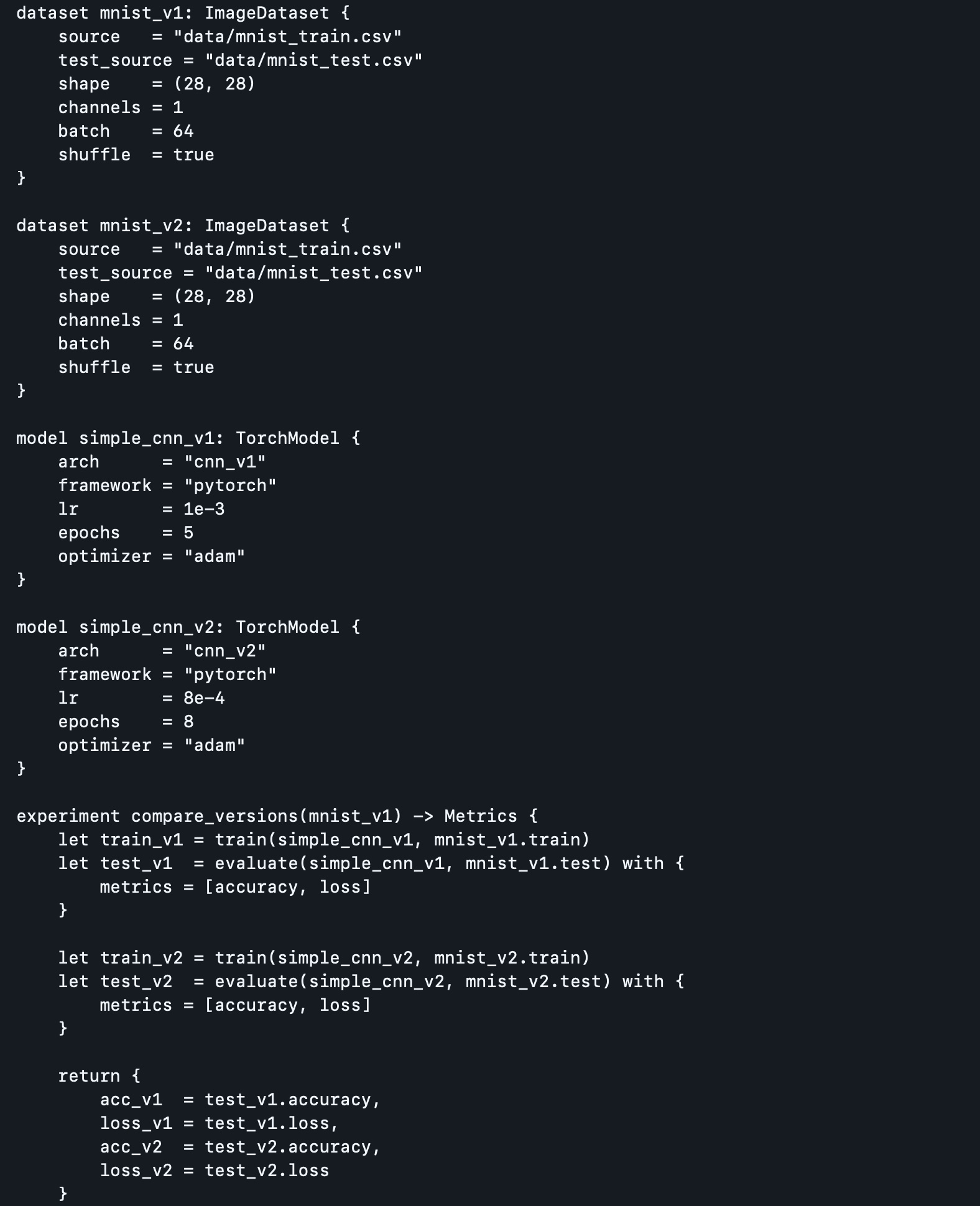

SyntraLine++ is a compiler-oriented DSL and execution pipeline that turns machine learning experiments into structured, statically validated programs. It combines the clarity of a DSL, the safety of compiler passes, and the flexibility of modern ML frameworks. The compiler has the following properties:

- Parses `.syntra` programs and constructs a strongly typed Abstract Syntax Tree (AST).

- Lowers programs into an Intermediate Representation (IR) consisting of datasets, models, and experiment pipelines.

- Performs semantic validation & normalization, including architecture collapsing, dataset–model consistency checks, hyperparameter inference, and experiment correctness.

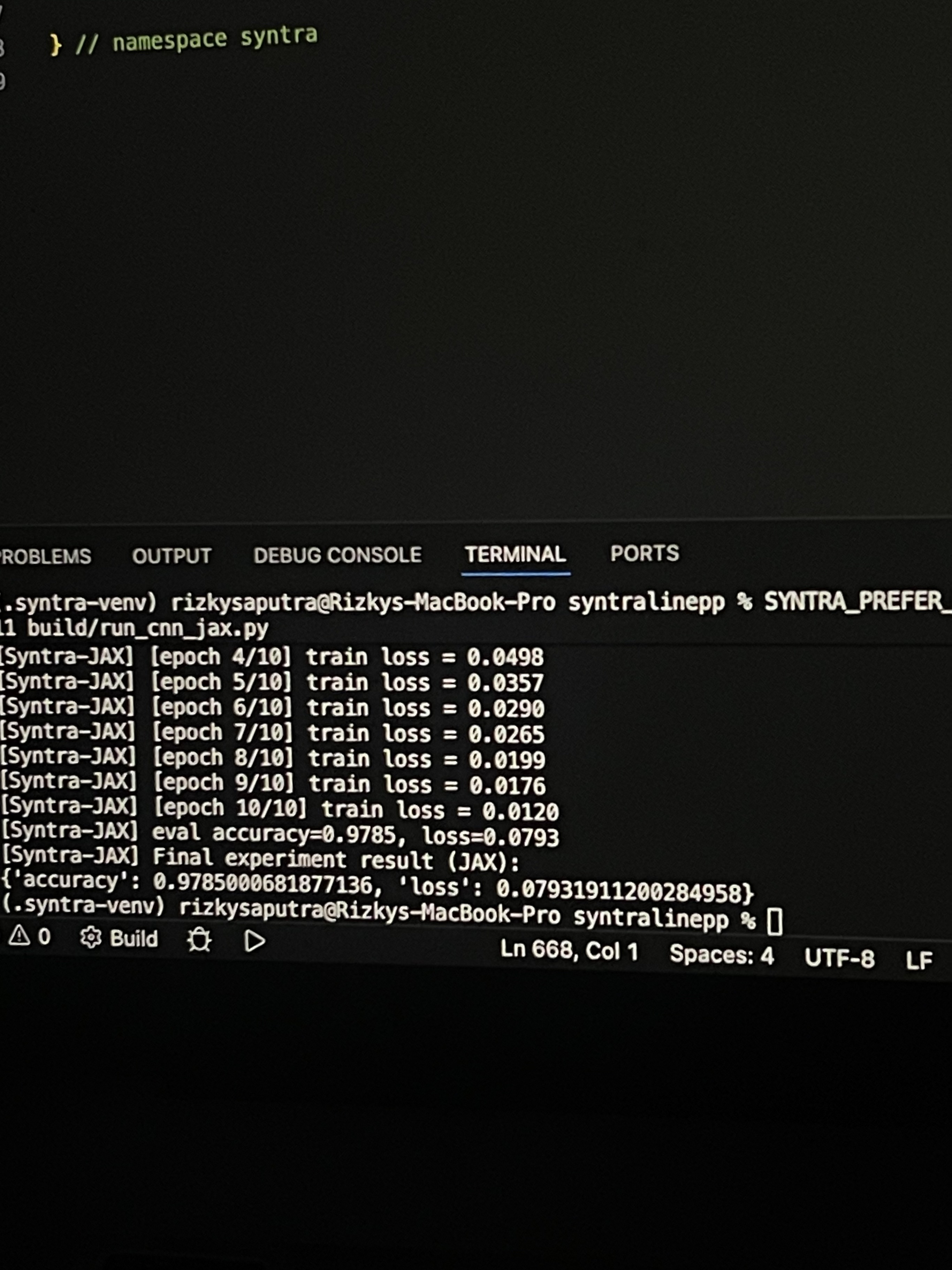

- Emits runnable execution code in either PyTorch or JAX, matching the user’s backend selection.

- Supports experimentation constructs such as automatic version comparison, hypergrid search, evaluation suites, and real-data CSV integration.

- Produces reproducible experiment outputs with structured dictionaries for downstream logging, visualization, and benchmarking.
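
To make the hypergrid search and structured-output properties concrete, here is a minimal Python sketch. It is not SyntraLine++'s actual implementation: the function names `expand_hypergrid` and `run_grid`, the shape of the result dictionaries, and the stand-in training function are all assumptions made for illustration.

```python
from itertools import product


def expand_hypergrid(grid):
    """Expand {name: [values]} into a list of concrete configurations."""
    names = sorted(grid)  # fixed ordering keeps the expansion reproducible
    combos = product(*(grid[name] for name in names))
    return [dict(zip(names, values)) for values in combos]


def run_grid(grid, train_fn):
    """Run train_fn on every configuration and collect structured records.

    train_fn is a hypothetical callable that takes one configuration
    dict and returns a dict of metrics.
    """
    results = []
    for index, config in enumerate(expand_hypergrid(grid)):
        metrics = train_fn(config)
        # One dictionary per run, ready for logging or benchmarking.
        results.append({"run": index, "config": config, "metrics": metrics})
    return results


def fake_train(config):
    """Stand-in for a real training run; returns a dummy metric."""
    return {"loss": config["lr"] * config["batch_size"]}


records = run_grid({"lr": [0.1, 0.01], "batch_size": [32, 64]}, fake_train)
print(len(records))  # one record per grid point
```

Sorting the hyperparameter names before taking the Cartesian product means the same grid always yields the same run order, which is one simple way to keep outputs comparable across invocations.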

Research Purpose

The majority of machine learning codebases encode experiments as ad-hoc scripts. SyntraLine++ pushes the workflow into a declarative program that can be:

- validated before it runs,

- normalized into canonical forms (so experiments are comparable),

- compiled into backend-specific runners (PyTorch/JAX),

- and logged with consistent artifacts.
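
One way to picture "normalized into canonical forms" is the sketch below, which fills in defaults and serializes an experiment specification with sorted keys so that two equivalent specifications produce the same fingerprint. The `DEFAULTS` table and the functions `canonicalize` and `fingerprint` are hypothetical; the source does not describe how SyntraLine++ performs normalization internally.

```python
import hashlib
import json

# Hypothetical defaults; the real compiler's defaults are not given in the source.
DEFAULTS = {"optimizer": "sgd", "epochs": 1}


def canonicalize(spec):
    """Return a canonical JSON string for an experiment specification."""
    merged = {**DEFAULTS, **spec}  # explicit values override defaults
    # Sorted keys and fixed separators remove ordering and whitespace differences.
    return json.dumps(merged, sort_keys=True, separators=(",", ":"))


def fingerprint(spec):
    """Hash the canonical form so equivalent experiments share one identifier."""
    return hashlib.sha256(canonicalize(spec).encode("utf-8")).hexdigest()


a = {"lr": 0.1, "epochs": 1}
b = {"epochs": 1, "optimizer": "sgd", "lr": 0.1}  # same experiment, written differently
print(fingerprint(a) == fingerprint(b))
```

Under these assumptions, two specifications that differ only in key order or in spelling out a default compare as equal, while any change to an actual hyperparameter changes the fingerprint.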

Architecture Overview

- Frontend: DSL parsing + AST construction

- Lowering: AST → IR (datasets/models/pipelines)

- Passes: semantic checks, normalization, inference, consistency validation

- Backend emission: generate runnable pipelines for PyTorch / JAX

- Artifacts: structured outputs for eval, tracking, and reproducibility
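
The stages above can be sketched end to end in a few dozen lines of Python. This is an illustrative toy, not the project's code: the IR classes, the dict-shaped AST, the single consistency check, and the emitted stub are all assumptions, and the real frontend, passes, and backends are certainly richer.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetIR:
    name: str
    num_features: int


@dataclass
class ModelIR:
    name: str
    input_dim: int
    layers: list = field(default_factory=list)


@dataclass
class ExperimentIR:
    dataset: DatasetIR
    model: ModelIR
    backend: str = "pytorch"


def lower(ast):
    """Lowering: turn a parsed (here dict-shaped, hypothetical) AST into IR objects."""
    ds = ast["dataset"]
    m = ast["model"]
    return ExperimentIR(
        dataset=DatasetIR(ds["name"], ds["num_features"]),
        model=ModelIR(m["name"], m["input_dim"], list(m.get("layers", []))),
        backend=ast.get("backend", "pytorch"),
    )


def check_consistency(ir):
    """Pass: collect semantic errors instead of failing at run time."""
    errors = []
    if ir.model.input_dim != ir.dataset.num_features:
        errors.append(
            f"model '{ir.model.name}' expects {ir.model.input_dim} features, "
            f"but dataset '{ir.dataset.name}' provides {ir.dataset.num_features}"
        )
    if ir.backend not in ("pytorch", "jax"):
        errors.append(f"unknown backend '{ir.backend}'")
    return errors


def emit(ir):
    """Backend emission: return a source stub for the selected backend."""
    header = "import torch" if ir.backend == "pytorch" else "import jax"
    return f"{header}\n# experiment: {ir.model.name} on {ir.dataset.name}\n"


def compile_experiment(ast):
    """Frontend output -> IR -> passes -> emitted code."""
    ir = lower(ast)
    errors = check_consistency(ir)
    if errors:
        raise ValueError("; ".join(errors))
    return emit(ir)


example_ast = {
    "dataset": {"name": "iris", "num_features": 4},
    "model": {"name": "mlp", "input_dim": 4, "layers": [16, 3]},
    "backend": "jax",
}
print(compile_experiment(example_ast))
```

The point of the sketch is the ordering: a dataset and model mismatch is reported by the pass before any backend code is generated, so an invalid experiment never reaches the training loop.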

Future Roadmap

- Semantic checking and validation passes

- Precision / Recall / F1 metrics for evalsuite in PyTorch and JAX

- JAX backend parity with PyTorch pipeline examples

- Distributed-training hints (gradient accumulation + config propagation)

- Channel-aware and shape-aware dataset handling in both backends
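
As a reference point for the planned Precision / Recall / F1 metrics, the following is a framework-independent sketch of the standard binary definitions in plain Python. The function name `precision_recall_f1` and the returned dictionary layout are assumptions; the roadmap does not say how the evaluation suite will expose these metrics in PyTorch or JAX.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute binary precision, recall, and F1 from two label sequences."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = precision_recall_f1([1, 0, 1, 1, 0], [1, 1, 1, 0, 0])
print(scores)
```

Returning a dictionary keeps the result in the same structured form as the other experiment outputs described in the summary.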

Detailed Specifications

- Domain: DSL Compiler Design, Operating Systems, Real-Time Systems, Machine Learning

- Core: AST, IR, Passes, Backend

- Backends: PyTorch, JAX

- Focus: Validation, Operationalization, Reproducibility